In our last blog post, we discussed why it’s important to set up A/B tests to track changes and visitor behavior. This week, we focus on how to create personalized tests and take an in-depth look at a successful case study for ProClip USA, the exclusive North American distributor of ProClip in-vehicle mounting solutions.

If you have ever test-driven a car, chances are you drove the model with all the bells and whistles. Every car model starts out the same way, with the basics, and then features are added: air conditioning, a sunroof, a leather interior and automatic windows. The result is multiple versions of one model that are identical except for a few variables. This is a great way to understand A/B testing. By changing a variable in your split tests, you can track how consumer behavior responds, which in turn helps you build a race track to your own success.

ProClip’s car mount products are custom fit for each vehicle model and for numerous mobile devices, from iPads to GPS devices. Their customer base is broad, serving original equipment manufacturers, vehicle dealers, police and emergency vehicles, sales and delivery, leasing companies, construction industries and the average consumer. To increase sales across this large U.S. customer base, ProClip and Acumium set up an email blast with an A/B test earlier this year to find out whether an email that included a discount offer would drive more sales than one that did not.

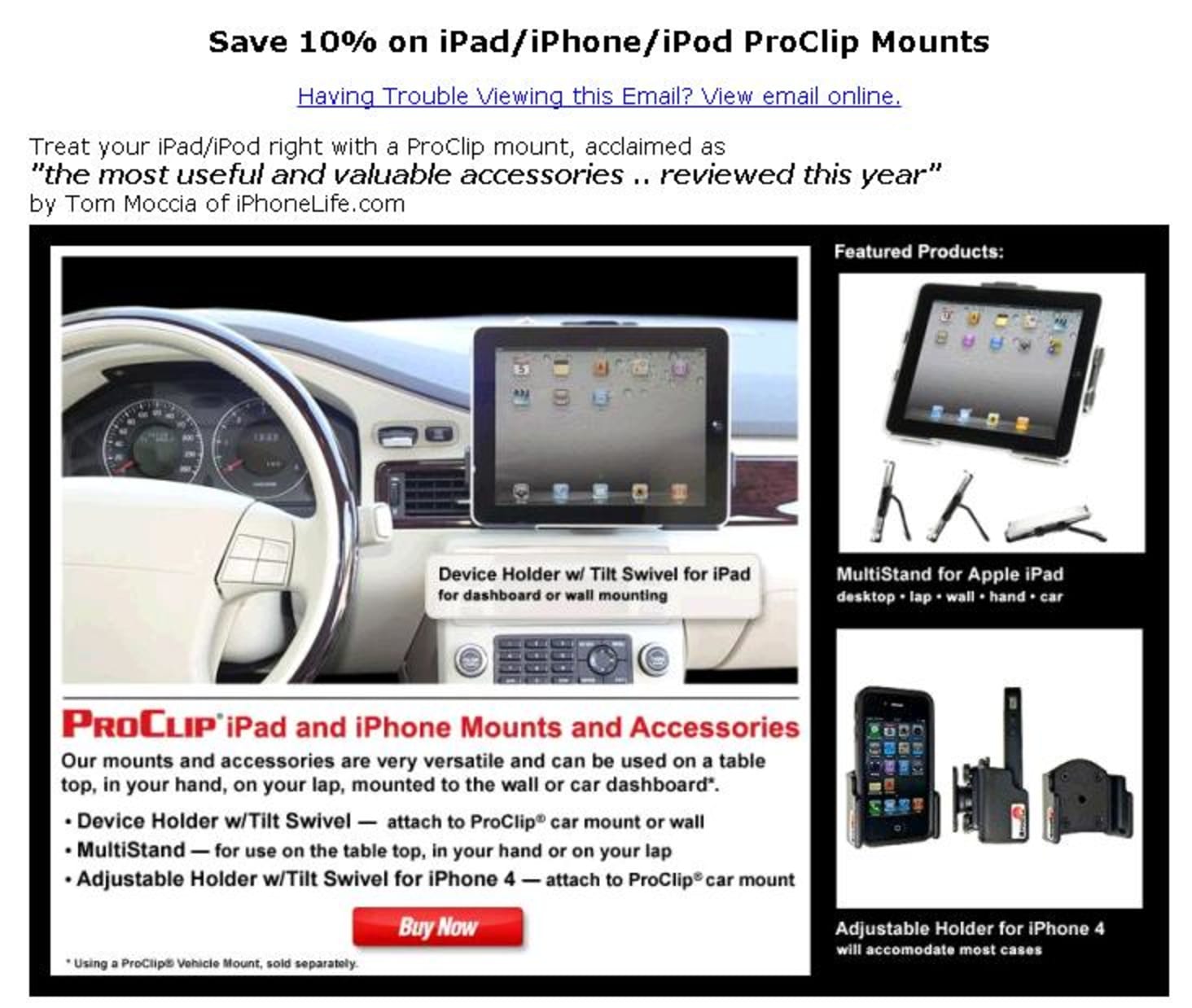

One of the first things ProClip did was determine who would receive the emails. Thousands of customers were selected based on their past purchases of iPad/iPhone/iPod ProClip mounts. Half of the list would receive a typical ad (the “control”) that encouraged the consumer to click through with a 10% discount. The other half would receive the variant: the exact same ad, but without the 10% discount.
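The post doesn't say how the two halves were chosen; a random split is the usual way to keep the groups comparable so that the ad is the only real difference between them. As a minimal Python sketch, assuming the recipients are a plain list of email addresses (the `split_list` helper and the sample addresses are hypothetical, not part of ProClip's or Acumium's setup):

```python
import random


def split_list(recipients, seed=42):
    """Randomly split recipients into two halves of (nearly) equal size."""
    shuffled = list(recipients)             # copy so the caller's list is untouched
    random.Random(seed).shuffle(shuffled)   # fixed seed makes the split reproducible
    midpoint = len(shuffled) // 2
    return shuffled[:midpoint], shuffled[midpoint:]


if __name__ == "__main__":
    customers = [f"customer{i}@example.com" for i in range(10)]
    discount_group, no_discount_group = split_list(customers)
    print(len(discount_group), len(no_discount_group))
```

Fixing the seed means the same list always splits the same way, which makes it easier to audit who received which version later.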

The 10% discount email ad looked like this:

Not shown here: the bottom of the ad contained text allowing recipients to opt out of the mailing list. The ad was basic and informative and offered customers a discounted rate. It performed well on both click-throughs and conversions.

Overall, the results were fairly successful. Of the mailing list, 38.4% opened the email, and 23.7% of those openers clicked on the ad to visit the website, so about 9.1% of everyone on the list clicked through. Of those who clicked, 81.8% were new clickers (past customers who had never participated in an email campaign before) and 18.2% had participated in past campaigns. By tracking these click-throughs and customers on the back end, ProClip was eventually able to determine a conversion rate of 2.6% per opened email and 10.8% per click-through. We were also able to see which products were yielding the most clicks, which is invaluable information.
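Each rate in an email funnel is measured against the stage before it, so the rates multiply. A minimal Python sketch using the figures reported for the 10% discount email, assuming the 10.8% figure is a conversion rate per click-through (our reading of the original wording). Because the inputs are rounded, the derived values are approximate:

```python
# Reported stage-to-stage rates for the 10% discount email.
OPEN_RATE = 0.384        # share of the mailing list that opened the email
CLICK_TO_OPEN = 0.237    # share of openers who clicked through
CONV_PER_CLICK = 0.108   # share of clickers who placed an order (assumed meaning)


def funnel(open_rate, click_to_open, conv_per_click):
    """Chain stage-to-stage rates into rates relative to earlier stages."""
    return {
        # clicks as a share of everyone on the list
        "click_rate": open_rate * click_to_open,
        # orders as a share of opened emails
        "conv_per_open": click_to_open * conv_per_click,
        # orders as a share of everyone on the list
        "conv_per_recipient": open_rate * click_to_open * conv_per_click,
    }


if __name__ == "__main__":
    for name, value in funnel(OPEN_RATE, CLICK_TO_OPEN, CONV_PER_CLICK).items():
        print(f"{name}: {value:.1%}")
```

Multiplying 38.4% by 23.7% reproduces the 9.1% overall click rate, and 23.7% times 10.8% gives roughly the 2.6% conversion rate per opened email, so the reported figures are consistent with one another.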

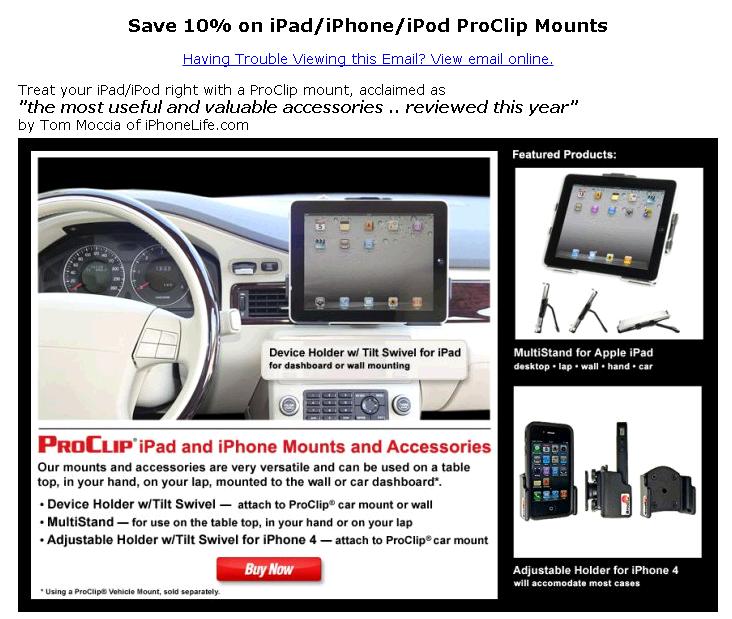

The true test was the second half of the email blast, which received the variant ad. If you had to guess, which ad do you think performed better: the one with the 10% discount or the one without it?

The second ad without the discount looked like this:

The overall percentage of people who clicked on this ad was 10.7%, compared to 9.1% for the 10%-off ad, but the unique email opens were even more surprising: an impressive 47.9%, compared to the first email’s 38.4%! Are you surprised by the results? Could you have imagined that the ad without the discount would outperform the discounted email? Before you read too much into those email metrics, though, look at sales: the overall conversion rate was actually 161% higher with the discount than without it.
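Comparisons such as “47.9% versus 38.4%” are easiest to read as a relative lift: how much higher one rate is than the other, as a fraction of the baseline. A minimal Python sketch using the rounded rates reported for the two emails (the `relative_lift` helper is our own illustration):

```python
def relative_lift(variant, baseline):
    """Return how much higher `variant` is than `baseline`, as a fraction."""
    if baseline <= 0:
        raise ValueError("baseline must be positive")
    return variant / baseline - 1


# Reported rates, in the order (10% discount email, no-discount email).
OPENS = (0.384, 0.479)
CLICKS = (0.091, 0.107)  # clicks as a share of the whole mailing list

if __name__ == "__main__":
    print(f"Open lift without discount:  {relative_lift(OPENS[1], OPENS[0]):.1%}")
    print(f"Click lift without discount: {relative_lift(CLICKS[1], CLICKS[0]):.1%}")
    # Click-to-open rate: of the people who opened, how many clicked?
    for label, opens, clicks in zip(("discount", "no discount"), OPENS, CLICKS):
        print(f"Click-to-open, {label}: {clicks / opens:.1%}")
```

By the same definition, a conversion rate that is 161% higher is 2.61 times the baseline. With these rounded inputs, the no-discount email's click-to-open rate works out to about 22.3% versus 23.7% for the discount email, which suggests its extra clicks came mainly from more people opening it.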

When you offer a discount, profitability is always a concern, and the cost-benefit trade-off is open to debate. Comparing the two tests, we found a 166% increase in orders from the 10% discount, but only a 6.3% decrease in average order value. In other words, although ProClip offered 10% off, the average order, valued at full price, was roughly 4% larger than for customers without the offer (0.937 ÷ 0.9 ≈ 1.04). This is useful when weighing extra sales against a percentage discount: even though a discount lowers your profit margin on each product, it may be worth it overall. In this case, the 6.3% drop in average order value was well worth the 161% increase in conversion rate.
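Because revenue is simply orders times average order value, the trade-off can be checked with a couple of multiplications. A minimal Python sketch, assuming the 166% figure compares order counts from two similarly sized halves of the list and that the 10% discount applies to the entire order; it looks at revenue only, since product costs (and therefore margins) aren't given:

```python
# Discount email relative to the no-discount email, from the reported figures.
DISCOUNT = 0.10      # 10% off the order
ORDER_RATIO = 2.66   # 166% more orders, i.e. 1 + 1.66
AOV_RATIO = 0.937    # 6.3% lower average order value, i.e. 1 - 0.063


def revenue_ratio(order_ratio, aov_ratio):
    """Revenue = orders x average order value, so the two ratios multiply."""
    return order_ratio * aov_ratio


def full_price_basket_ratio(aov_ratio, discount):
    """Discounted order valued at full price, relative to the undiscounted order.

    Assumes the discount applies to the whole order.
    """
    return aov_ratio / (1 - discount)


if __name__ == "__main__":
    r = revenue_ratio(ORDER_RATIO, AOV_RATIO)
    b = full_price_basket_ratio(AOV_RATIO, DISCOUNT)
    print(f"Revenue with discount: {r:.2f}x ({r - 1:+.1%})")
    print(f"Order at full price:   {b:.3f}x ({b - 1:+.1%})")
```

With these inputs, the discount email brings in roughly 2.49 times the revenue of the other email (about 149% more), and customers who got the offer put about 4.1% more merchandise, at list price, into each order.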

Consumer behavior can be unpredictable, but A/B testing is one of the simplest and fastest ways to measure whether a change succeeds. Who would have guessed that there would be higher participation without an offer, but a higher conversion rate with one?

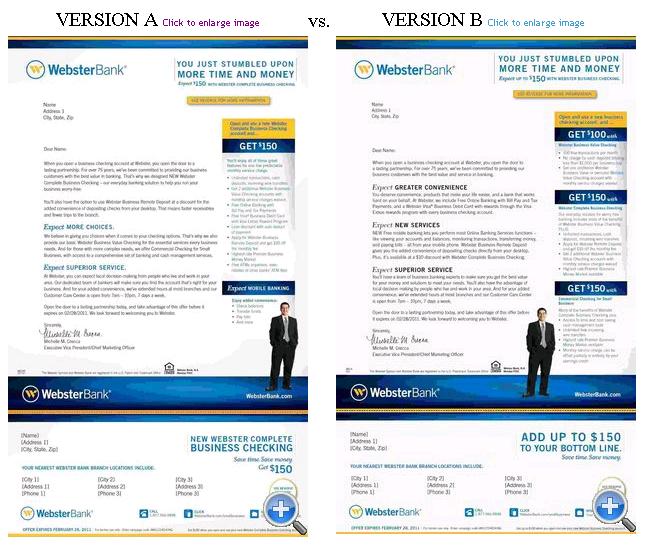

A/B testing can be applied in many more ways than email blasts. A great example is a graphic-display A/B test from Anne Holland’s visual test. The test asks the viewer to look at two web pages and decide, based on the graphics alone, which one produced the better results in an A/B test:

What do your instincts tell you at first glance? The actual test results revealed Version B to be the better of the two, with 37% more prospects convinced to open business checking accounts with Webster Bank. Holland writes, “According to age-old, direct marketing best practices, adding offer choices usually kills response rates. So, we love this test because it proves sometimes testing ‘worst practices’ is worthwhile!” Webster Bank’s success with its multiple-offer letter and ProClip’s surprising results with its non-discounted ad show that the best way to get to know your consumers is to test.

Creating your own A/B test should not be intimidating. The best way to start is to arm yourself with knowledge and decide how long a test needs to run before you move on. Repeat variations when possible, and set up rigorous tracking. As Anne Holland suggests, think outside the box when it comes to the “best practices” of marketing.
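How long a test must run mostly comes down to how many people each version needs to reach before a difference can be trusted. The sketch below uses the standard normal-approximation sample-size formula for comparing two proportions with a two-sided test; this is a technique we are introducing for illustration, not one the case study describes. The 9.1% and 10.7% click rates come from the ProClip test, while the 5% significance level, 80% power and 1,000 daily visitors are hypothetical assumptions. For an email blast, the constraint is list size rather than days, but the per-version count is the same idea:

```python
import math
from statistics import NormalDist


def sample_size_per_variant(p1, p2, alpha=0.05, power=0.80):
    """People needed in EACH group to detect a change from rate p1 to rate p2.

    Normal-approximation formula for two proportions, two-sided test.
    """
    if p1 == p2:
        raise ValueError("p1 and p2 must differ")
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # about 1.96 for alpha = 0.05
    z_beta = NormalDist().inv_cdf(power)           # about 0.84 for 80% power
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    return math.ceil((z_alpha + z_beta) ** 2 * variance / (p1 - p2) ** 2)


def days_to_run(n_per_variant, daily_visitors, variants=2):
    """Days needed if traffic is split evenly across the variants."""
    return math.ceil(n_per_variant * variants / daily_visitors)


if __name__ == "__main__":
    n = sample_size_per_variant(0.091, 0.107)
    print(f"About {n} recipients per version")
    print(f"About {days_to_run(n, daily_visitors=1_000)} days at 1,000 visitors/day")
```

The smaller the difference you want to detect, the more people each version needs, which is why subtle changes call for longer tests.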

Chances are you wouldn’t buy a new car without test-driving it, right? Your ultimate goal in measuring success is to create a better experience for your consumers and drive results.

Please feel free to get in touch with us as we continue this ongoing series on Measuring Success. Have you performed an A/B test with surprising results? How do you track your conversions? Let us know!